So I left off last time with simple benchmark results after moving my test VM around from one type of storage to the next. If you haven’t read that article, here is a link for you to catch up on. As you may recall, we just wrapped up measuring throughput on the various storage types (two SSDs, an eight-disk RAID10 array, and a five-disk RAID5 array). Now we’ll be measuring the IOPS (I/O Operations Per Second) that these storage types are capable of.

If you read up on storage solutions, you’ll find that many people don’t think that IOPS matter. In fact, one of the most popular hits when searching is an article titled “Why IOPS don’t matter”. While I can agree with some of what the article says, I cannot agree with it wholly. Its claim is that IOPS as a whole are irrelevant because a given benchmark profile will test high on some systems and low on others. This is true. It also mentions that the average block size for IOPS benchmarks is less than 10KB, while a very low percentage of files on production disks are that small. Agreed. It states that you should “run the application on the system” and not benchmarks on the system – eh, this is where I start to disagree. The article goes down the path of recommending that you measure application metrics rather than storage metrics: evaluate the application as a whole and not just the storage system’s ability to churn out benchmark numbers.

The thing is, if the system is incapable of churning out 10,000 IOPS then it’ll never be able to support a database with 500 concurrent sessions pulling 20 transactions a second. A queue will build, latency will go up, and the application will drag. Now, a system with 500 sessions making 20 transactions a second is a pretty busy system, but if you haven’t run a benchmark and confirmed the storage can meet that minimum, who cares about measuring the application? And further, how could you measure the application’s performance without already having purchased a back-end storage solution, and if it were to fall short, what are you to do at that point?
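As a rough sketch of that arithmetic, the snippet below multiplies sessions by transactions per second and shows how a backlog grows once demand exceeds what the storage can deliver. It assumes one I/O per transaction, which is my simplification (real transactions often generate several I/Os). The function names and the 8,000 IOPS capacity are purely illustrative.

```python
def required_iops(sessions, tx_per_session_per_sec, ios_per_tx=1):
    """IOPS the storage must sustain. ios_per_tx=1 is an assumption."""
    return sessions * tx_per_session_per_sec * ios_per_tx


def backlog_after(seconds, demand_iops, capacity_iops):
    """I/Os left waiting in the queue after `seconds` of sustained demand."""
    return max(0, demand_iops - capacity_iops) * seconds


demand = required_iops(500, 20)
print(demand)                           # 10000
# Hypothetical array that tops out at 8,000 IOPS: the queue keeps growing.
print(backlog_after(10, demand, 8000))  # 20000
```

If capacity is at or above demand, the backlog stays at zero, and that is the minimum bar a synthetic benchmark lets you check before any application is installed.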

So anyway, I believe that measuring your basic performance attributes with synthetic benchmarks is the only way to ballpark a solution. Sure, in some cases, IOPS don’t matter – sometimes throughput is key, or sometimes latency, etc. We’ve already got throughput figures, so here come the IOPS.

Performing this test in a VM can be tricky. Not all benchmark utilities allow testing against a virtual hard disk. So, to avoid any issues, I’ve found a PowerShell script that will run the test and output figures. For details on the script, follow this link. For testing IOPS you’ll want to make sure you specify Get-SmallIO in the command, otherwise the block size in the test will be large and you’ll be testing throughput.
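The reason block size decides whether you are testing IOPS or throughput is that the two are tied together: throughput is roughly IOPS multiplied by block size. Here is a minimal sketch of that relationship. The helper names are mine, the 44,000 and 83,000 IOPS inputs are the rounded MX100 results reported further down in this post, and the 500 MB/s at 1MB blocks case is a made-up illustration.

```python
def throughput_mb_per_s(iops, block_kb):
    """Throughput in MB/s (1 MB = 1024 KB) for a given IOPS and block size."""
    return iops * block_kb / 1024


def iops_at(throughput_mb, block_kb):
    """IOPS needed to move `throughput_mb` MB/s using `block_kb` KB blocks."""
    return throughput_mb * 1024 / block_kb


print(throughput_mb_per_s(44_000, 8))  # 343.75
print(throughput_mb_per_s(83_000, 4))  # 324.21875
# Hypothetical large-block test: plenty of MB/s, very few operations.
print(iops_at(500, 1024))              # 500.0
```

Seen this way, a small-block test stresses how many operations the storage can complete, while a large-block test mostly measures how much data it can stream.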

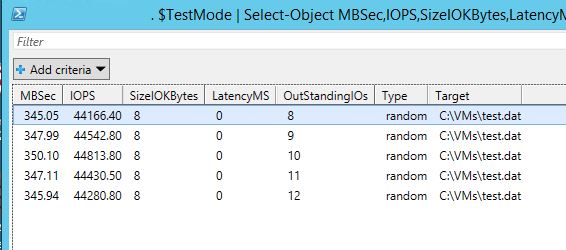

First up is the Crucial MX100 256GB aka “SSD1”: Above you’ll see a healthy 44,000 IOPS which is nice. But, you might have noticed that a Crucial MX100 256GB SSD is supposed to be capable of 85,000 IOPS. Looking closer you’ll see that the SizeIOKBytes shows as “8” so we can see that this script is running 8KB block tests but most IOPS ratings are in reference to 4KB blocks. If we edit the script to use 4KB blocks, let’s see what happens:

Above you’ll see a healthy 44,000 IOPS which is nice. But, you might have noticed that a Crucial MX100 256GB SSD is supposed to be capable of 85,000 IOPS. Looking closer you’ll see that the SizeIOKBytes shows as “8” so we can see that this script is running 8KB block tests but most IOPS ratings are in reference to 4KB blocks. If we edit the script to use 4KB blocks, let’s see what happens:

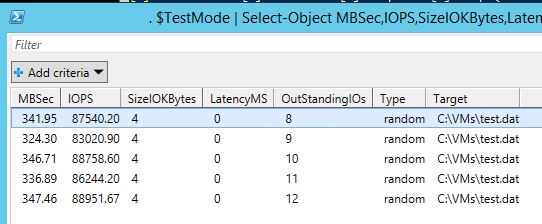

That’s more like it – 83,000 IOPS at its lowest. That is more in line with what we’d expect to see of a Crucial MX100 256GB. Next is the Samsung 850 Evo 250GB, aka “SSD2”:

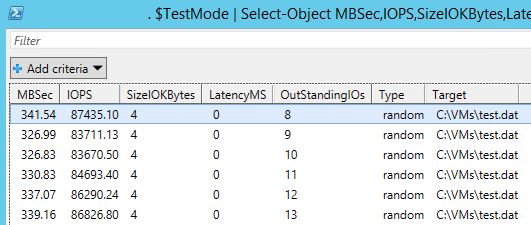

This is pretty similar all in all – why is that when the 850 Evo is rated higher? Queue depth. Random 4K block reads with a queue depth of “1” are rated anywhere from 11,000 – 90,000 IOPS, but a queue depth of 32 would result in 197,000 IOPS. Unfortunately, I don’t have any way to build a deep queue with this script, but that’s OK because we’re most interested in single random 4KB block reads/writes anyhow. Now the RAID10:

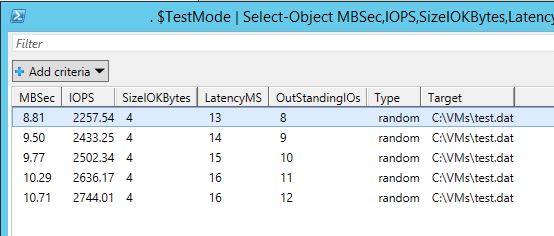

As expected, much lower IOPS in the eight-disk RAID10 array when compared to either SSD. Latency is much higher as well, at around 13 – 16ms (the SSDs show 0 latency because their true latency is well below a millisecond, down in the microsecond range). And finally, the RAID5 array:

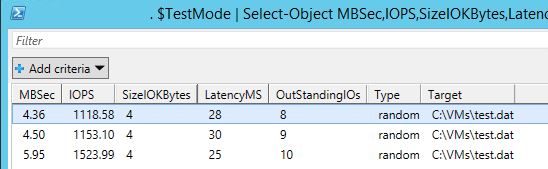

This five-disk RAID5 array scores about half the IOPS of an eight-disk RAID10, which is about right. Write IOPS would show a more significant difference, but again we’re just hitting this at a high level and sticking to reading random 4KB blocks.

One thing that might be skewing the results is cache – the LSI 9260-8i that the RAID10 is on has a BBU supporting write-back cache (512MB DDR3). And, further, the RAID5 is in a Synology DS1513+ which may or may not have some caching. I would expect that it does have some cache skewing the results, because five disks at even a healthy 120 IOPS per disk should deliver around 600 – 700 IOPS… not the 1100 – 1500 measured. So there must be some skewing going on there. But, alas, even with whatever caching is going on, the IOPS are a telltale sign of overall performance.
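To put a number on that suspicion, here is a quick back-of-the-envelope check. It assumes random reads scale linearly across all five spindles and ignores parity layout and controller behaviour, so treat it as a ballpark only. The function name is just for illustration.

```python
def raw_read_iops(disks, iops_per_disk):
    """Naive estimate: every spindle contributes its full random-read IOPS."""
    return disks * iops_per_disk


expected = raw_read_iops(5, 120)
print(expected)  # 600

# Compare the measured range against the naive estimate.
for measured in (1100, 1500):
    print(measured, round(measured / expected, 2))
# 1100 1.83
# 1500 2.5
```

Measuring roughly 1.8 to 2.5 times what the spindles alone should manage is hard to explain without some caching in the path.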

I’ll try and figure out a way to quantify the speed differences between the storage types, but for the most part I think anyone here can come to the obvious conclusion… IOPS matter. I say that because, if you remember the throughput tests between the RAID10 and the SSD(s), the read/write benchmarks were very similar. We know that the throughput is similar between the two types, but the IOPS could not be further apart. For a file server or large-file access instance, the SSD and RAID10 array have similar throughput, and the benefit is that the RAID10, though more expensive in the long run (controller, 8 disks), is 14X the size. The RAID10’s IOPS, however, are only ~2.5% of the SSD’s! If we were to say that IOPS do not matter and just “measure the application”, we might be very, very disappointed, and would be throwing good money after bad.

This wraps up my brief storage test. Again, we know that SSDs are IOPS- and throughput-kings, but running these tests from within the VM itself is great because it takes into account any losses through the hypervisor and virtual layer. I see a lot of people concede, “…well, the SSD is fast, but you lose some of the speed to overhead in the hypervisor, etc., so…” – No! If the storage is packed full of chatty VMs, sure, there’s going to be some contention on the disk, but there should be very minimal losses storing data on an ESXi host with SSDs vs. storing data in a Windows 2012 R2 server with SSDs.

If you guys have any suggestions for pushing these tests in another direction please comment! I have plenty of space and ability to mix and match different configurations and can test different strategies. Next up for me will be a comparison just like the one conducted here, except against an eight-disk RAID10, RAID50, and RAID60 array with Western Digital Caviar Red 4TB disks. Look for that writeup soon!

I am a Sr. Systems Engineer by profession and am interested in all aspects of technology. I am most interested in virtualization, storage, and enterprise hardware. I am also interested in leveraging public and private cloud technologies such as Amazon AWS, Microsoft Azure, and vRealize Automation/vCloud Director. When not working with technology I enjoy building high performance cars and dabbling with photography. Thanks for checking out my blog!
