Finally getting around to starting my series on vRealize Automation! Are you as excited as I am?

This initial post is just going to cover the very high level basics around defining what vRealize Automation is and is not. I often see people in forums (and in meetings) confused about what vRealize Automation is trying to provide and when it should be leveraged.

So, what is vRealize Automation?

When you boil all of the “vJargon” out, vRealize Automation is a platform for providing end-users a controlled self-service portal for not only VM-provisioning but solution-provisioning. If you’re thinking, “Yeah but I can create roles in vCenter and let people provision VMs” then hang on, because there’s more to it than that. Basically, vRealize Automation takes the building blocks that are vCenter and ESXi and puts the icing on top.

The most common use for vRealize Automation is to give developers a pool of resources, transparent to the users themselves, to which they can deploy and manage their own VMs. However, you can also create blueprints for 3-tier solutions (think DB VM, App VM, Web VM…) that not only provision VMs but also create networks, firewall rules, load balancers, etc. through integration with VMware NSX. Maybe your company develops an application and every time a developer needs a test platform it takes 4 VMs, a VLAN/Network, etc. to get them going. Give them a blueprint that provisions the whole solution and be more productive!

Did I mention that the resources that end-users deploy to can include more than just vSphere? You can configure end-points in Azure, AWS, OpenStack, KVM, Hyper-V, and more. It would be hard to train users to know how to deploy in AWS vs. Azure vs. KVM vs. vSphere, but with vRealize Automation you can abstract away the platform and the user can work within one common interface. Powerful stuff.

Perhaps you have a development vCenter/Cluster that is riddled with VMs with no obvious ownership, all sitting there wasting resources because people have come and gone and not bothered to transition their test environments or decommission things when development is done. vRealize Automation makes it easier to keep track of who owns what, because when a blueprint is provisioned it is tied to the requesting user (and can be reassigned to another user as a Day 2 action) and includes a lease time so that nothing exists forever.

For instance, one thing I have found to be tremendously useful is to create VM blueprints with lease times set with a minimum of 1 day and a maximum of 180 days. This means that, at most, a VM can live forgotten and abandoned for 180 days before being archived/deleted. A user who still needs the VM can extend the lease without issue (and you can even set approval policies), but you won’t have VMs sitting around forever. Users are notified of upcoming expirations by email, and expired VMs can be archived (left powered off after expiration) for however long you want before being deleted.
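To make the lease arithmetic concrete, here is a rough sketch in plain Python. It is not vRealize Automation code and doesn't call any vRA API; the 1 and 180 day bounds come from the example above, while the 30-day archive period and the function name `lease_timeline` are assumptions I made up for illustration.

```python
from datetime import date, timedelta

MIN_LEASE_DAYS = 1     # blueprint minimum from the example above
MAX_LEASE_DAYS = 180   # blueprint maximum from the example above
ARCHIVE_DAYS = 30      # hypothetical archive period; use whatever suits you


def lease_timeline(provisioned, requested_days, archive_days=ARCHIVE_DAYS):
    """Return (expires, destroyed) dates for a requested lease length."""
    if not MIN_LEASE_DAYS <= requested_days <= MAX_LEASE_DAYS:
        raise ValueError(
            f"lease must be between {MIN_LEASE_DAYS} and {MAX_LEASE_DAYS} days"
        )
    # The VM expires when the lease runs out and is powered off (archived).
    expires = provisioned + timedelta(days=requested_days)
    # It is only deleted once the archive period has also passed.
    destroyed = expires + timedelta(days=archive_days)
    return expires, destroyed


expires, destroyed = lease_timeline(date(2018, 1, 1), 180)
print(expires, destroyed)
```

With these assumed numbers, a VM requested on the maximum lease and then forgotten is gone after at most 210 days.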

It gets even better – VMware has provided a ton of functionality for deploying VMs right out of the box and makes use of templates and customization files that you’re probably already using right out of vCenter. But, they’ve also allowed for custom properties to be defined. For instance, one scenario I have in my lab is that I want to be able to provision VMs to several different portgroups (VLANs) – so, I’ve allowed my users to have access to said portgroups through reservations and then added a property to the blueprints called VirtualMachine.Network0.Name and with a few other tweaks the users can now deploy VMs to select networks.
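Conceptually, that custom property is just a key/value pair attached to the request, and the reservation decides which values are valid. The sketch below models that idea in plain Python. The portgroup names and the helper `build_custom_properties` are hypothetical, and this is not how vRealize Automation implements it internally; only the property name `VirtualMachine.Network0.Name` comes from my lab setup.

```python
# Hypothetical portgroups exposed to a business group through its reservation.
RESERVED_PORTGROUPS = {"VLAN10-Dev", "VLAN20-Test", "VLAN30-Lab"}


def build_custom_properties(network_name, reserved=RESERVED_PORTGROUPS):
    """Return the custom-property dict for a request.

    Raises ValueError if the chosen network is not part of the reservation.
    """
    if network_name not in reserved:
        raise ValueError(f"{network_name!r} is not available in this reservation")
    # The value is the portgroup that the VM's first NIC should attach to.
    return {"VirtualMachine.Network0.Name": network_name}


props = build_custom_properties("VLAN20-Test")
print(props)
```

The point of the check is that users only ever get to pick from networks the reservation already grants them.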

There’s so much more vRealize Automation can do, but this should give you a good idea of what it’s trying to accomplish.

What makes up a vRealize Automation environment?

There are a few components that make up the actual vRealize Automation solution. As of this writing, I’ll be referring to vRealize Automation 7.3/7.4 architecture but there are some aspects that are expected to change in the future. Right now the core components are:

- vRealize Automation Appliance (deployed from OVF)

- IaaS Server (Windows Server acts as Web Server, Model Manager, Manager Service, Distributed Execution Manager, optional SQL database)

- SQL Database

The vRealize Automation Appliance is responsible for all things SSO, has an embedded vRealize Orchestrator instance and a preconfigured Postgres database for handling operations, and hosts the Management Agent installer that your IaaS servers point to during installation.

The Infrastructure as a Service (IaaS) node is a Windows server that is responsible for provisioning across public and private cloud resources. Within the IaaS node there are several components. The Web Server component obviously provides web access to the other components related to provisioning. The Model Manager is responsible for versioning, security, persistence, etc. of the models that facilitate integration with external systems and databases. The Manager Service is a Windows service that orchestrates functionality between the DEM(s), SQL database, and SMTP. The Distributed Execution Manager (DEM) is basically the main worker or orchestrator of workflows. For instance, if you find that your vRealize Automation environment has too many concurrent workflows running (provisioning, reconfiguring, etc.) then you might deploy additional DEM nodes.

There are a few more minor components to the IaaS node (agents, VDI agents, etc.) but the ones listed above are the main, important requisite components.

Does it scale?

First things first: as usual, VMware provides configurations for all sizes, so yes, it scales. There are two types of deployment: Minimal and Enterprise. A Minimal Deployment is really geared toward proof-of-concept or lab environments and really shouldn’t be used for production because it has no high-availability by design. An Enterprise Deployment is a bit of a wildcard because there are varying degrees of complexity that can be deployed. Let’s take a look at a few examples.

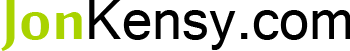

Here is what a typical Minimal Deployment might look like:

You can see that all of the components for the IaaS node (light blue) are on one box. The illustration above even goes so far as to include the SQL Server Database on the same IaaS server. You can see that there is no high-availability present in a Minimal Deployment.

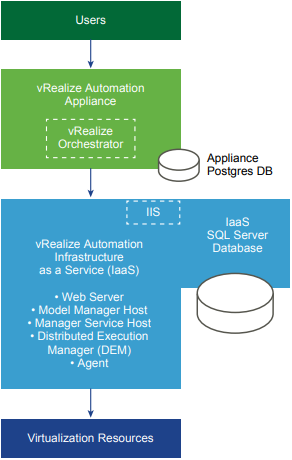

The next example will depict how we can move some of the IaaS node components out from a single server using Enterprise Deployment:

The above layout allows for better resource allocation, since each component can be given more or fewer resources as your environment needs, but more importantly it allows you to deploy additional DEM workers, which will provide for more concurrent scheduling of workflows and deployments.

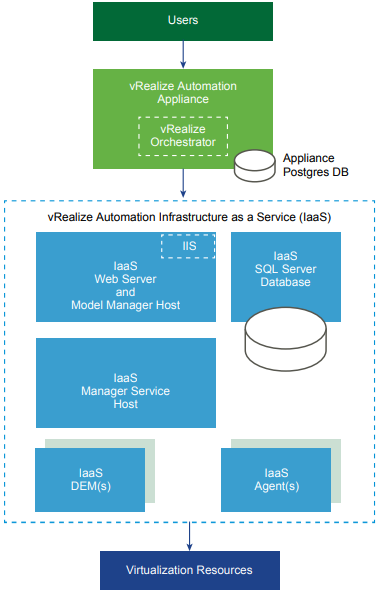

Finally, there’s the “real” Enterprise Deployment:

Now we’re talking! In the diagram above you can see we’ve now got N load-balanced vRealize Automation appliances for HA and scaling, kicking out orchestration requests to load-balanced vRealize Orchestrator appliances, all while passing further processing on through load-balanced Web Servers and Manager Service hosts to N DEM Workers, and on and on. We’ve got high-availability all day long. One thing worth noting is that the Model Manager can only run on one Web Server host at a time. I believe there is a fail-over mechanism, but I recall it being a manual fail-over.

Is it multi-tenant?

It is multi-tenant compatible, but probably not in the way you’re imagining, or at least not in the way that I would call “multi-tenant”. This is one thing I think VMware did a poor job explaining, unless I still do not understand it myself.

While vRealize Automation does support multi-tenancy it does so while catering to only one “organization” or company. What does this mean? Well, I can create an “organization” (as VMware calls it in vRealize Automation) and bind it to an Active Directory domain for SSO. However, the vRealize Automation appliance and IaaS node(s) will need to be able to work within this domain and more specifically they need to be able to interface with the vSphere infrastructure for that “organization”. So, do you really want to have a platform that has network access to both Active Directory and vSphere from some central location to multiple client organizations?

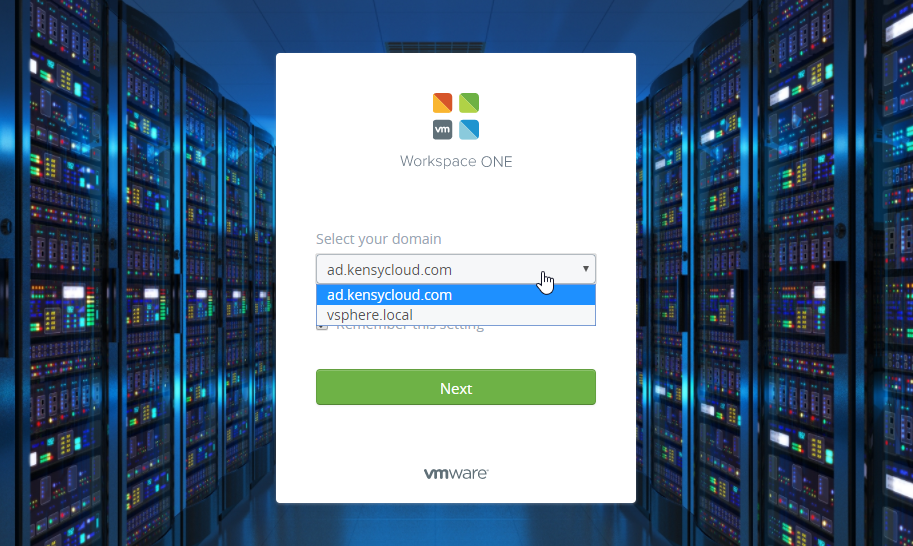

More over, the way you access an “organization” is by a specific URL behind the vRealize Automation appliance. For instance, I have an organization called “KensyCloud” that is accessible from https://cloud.kensycloud.com/vcac/org/kensycloud. This URL is not on the internet, but it would need to be reachable from the client “KensyCloud”. But here’s my gripe – if I stumbled across this portal either accidentally or through searching, I can figure out what the domain is setup for the organization and may be able to determine the name or details of the client. Check the following image out:

So, above you can see that if I happen to hit the URL listed previously, I can “select a different domain” and see all of the SSO domains linked to this organization. Sad face.

I’ve heard people recommend using arbitrary organization URLs. Instead of having https://cloud.kensycloud.com/vcac/org/kensycloud you might have https://cloud.kensycloud.com/vcac/org/org184810b33 but obviously this means you need to maintain some sort of cross-reference list to map org IDs to clients. This also doesn’t truly fix the issue, though, because if you were to hit the URL as https://cloud.kensycloud.com/ you could access the main vRealize Automation landing:

If you click the link pictured above you will be taken to the default tenant login page where…

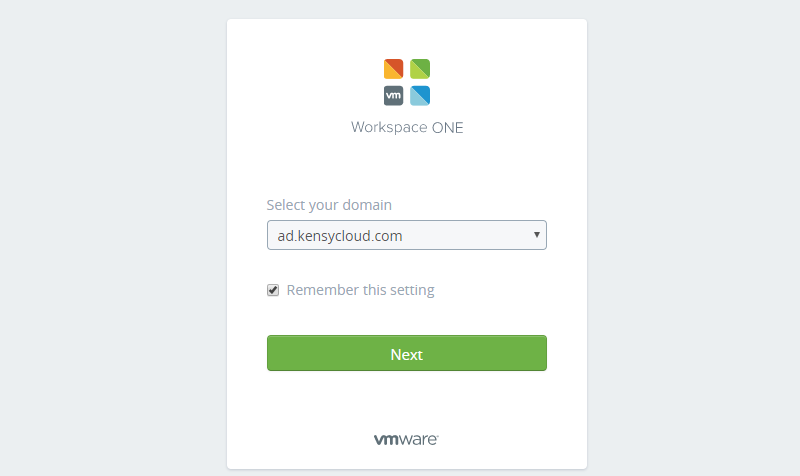

Oh no! We can see domain information again. I happen to let ad.kensycloud.com act as an authentication source for the default tenant (where you do configuration things) but you can see how this is less than ideal.

In my opinion, while it does support multiple “tenants”, I wouldn’t try deploying a single vRealize Automation infrastructure across multiple customers. If you work for a large company with many divisions or logical business units, then yes, vRealize Automation can cater to that for segregation of resources at a high level. You can, however, deploy VMs into multiple clients – so if you had a cloud platform and wanted your vSphere admins to use vRealize Automation to handle VM deployments you can totally do that but I wouldn’t give users from each customer access to vRealize Automation, personally – vCloud Director handles that a bit better in my opinion.
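If you do go down the road of arbitrary organization URLs mentioned earlier, the cross-reference list can be as simple as a lookup table. Here is a minimal sketch in plain Python, assuming an in-memory dict as the registry and an ID format of `org` plus eight random hex characters. Both of those, along with the function names, are my own illustrative choices and not anything vRealize Automation provides.

```python
import secrets

BASE_URL = "https://cloud.kensycloud.com/vcac/org"  # URL pattern from this post


def new_org_id():
    # "org" followed by 8 random hex characters; the format is an assumption.
    return "org" + secrets.token_hex(4)


def register_client(registry, client_name):
    """Assign an opaque org ID to a client and record it in the cross-reference."""
    org_id = new_org_id()
    while org_id in registry:  # very unlikely, but never reuse an ID
        org_id = new_org_id()
    registry[org_id] = client_name
    return f"{BASE_URL}/{org_id}"


registry = {}  # maps opaque org ID -> client name
url = register_client(registry, "KensyCloud")
print(url)
```

Keep in mind this only hides the client name in the URL. It does nothing about the domain information that the login page itself reveals.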

How does it handle multiple vSphere targets?

This will be the final section for my introduction on vRealize Automation, but it’s an important one and covers an important concept.

So, how does vRealize Automation handle multiple vSphere targets? What if I had a vCenter/cluster for business units (marketing, finance, R&D, engineering, etc.) or divisions of a company? Simple – entitlements and reservations.

Entitlements and reservations are the two mechanisms vRealize Automation utilizes as a sort of traffic cop for user deployments. The best part about this is that reservations are completely transparent (by default) to users and entitlements restrict their access to only blueprints or solutions they’re allowed to use.

Example: I may want R&D to be able to spin up their own VMs and solutions against a vCenter server containing hosts or clusters that are not design-validated and are more of a development environment. I would create a vSphere Endpoint for that vCenter, set up reservations for the “Business Group” called “R&D”, and entitle them to, say, a CentOS 7 VM deployment. The VMs they request will only deploy against that given vCenter, and the reservation I set up for them will restrict how much memory and disk space they can use, what network their VMs will deploy to, etc.

What if I wanted Engineering to be able to deploy to that same vCenter that R&D uses because they often help R&D out, but I also want Engineering to be able to provision VMs to a different, production vCenter? Simple: create the two vSphere end-points, but this time create reservations for Engineering for both the non-validated vCenter/Cluster and the validated production vCenter. Then, in the blueprint specifically entitled to Engineering, allow them to specify the “Location” of the CentOS 7 deployment. This will allow Engineering to decide where the VM gets deployed at the time of the request.

This is a very simple example but you can see how entitlements and reservations essentially form the fabric of vRealize Automation deployments. You can even go so far as to provide reservations on networks/network profiles, which will allocate IPs to VMs and track them in vRealize Automation’s IPAM solution. It’s very powerful!
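If it helps to see the traffic-cop logic spelled out, here is a toy model of the R&D and Engineering example in plain Python. The endpoint names, memory quotas, and the `placement_options` function are all hypothetical, and the real product evaluates far more than this (storage, priorities, policies, and so on). It is only meant to show how entitlements gate the catalog while reservations decide where a request can land.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reservation:
    business_group: str
    endpoint: str    # vSphere endpoint (vCenter) the reservation carves from
    location: str    # label a requester may pick, e.g. "Dev" or "Prod"
    memory_gb: int   # memory quota for the group on this endpoint


# Hypothetical data mirroring the R&D / Engineering example above.
RESERVATIONS = [
    Reservation("R&D", "vcenter-dev", "Dev", 256),
    Reservation("Engineering", "vcenter-dev", "Dev", 128),
    Reservation("Engineering", "vcenter-prod", "Prod", 512),
]
ENTITLEMENTS = {"R&D": {"CentOS 7"}, "Engineering": {"CentOS 7"}}


def placement_options(group, blueprint, location=None):
    """Return the endpoints a request may land on for this group and blueprint."""
    if blueprint not in ENTITLEMENTS.get(group, set()):
        return []  # not entitled, so the blueprint is not offered at all
    return [
        r.endpoint
        for r in RESERVATIONS
        if r.business_group == group and location in (None, r.location)
    ]


print(placement_options("R&D", "CentOS 7"))
print(placement_options("Engineering", "CentOS 7", location="Prod"))
```

R&D only ever resolves to the development vCenter, while Engineering can choose between the two by picking a location at request time.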

In future articles we’ll go through actually deploying vRealize Automation and configuring/deploying different VMs/blueprints/solutions.

For now, I hope you enjoyed this article and feel free to leave a comment or question!

I am a Sr. Systems Engineer by profession and am interested in all aspects of technology. I am most interested in virtualization, storage, and enterprise hardware. I am also interested in leveraging public and private cloud technologies such as Amazon AWS, Microsoft Azure, and vRealize Automation/vCloud Director. When not working with technology I enjoy building high performance cars and dabbling with photography. Thanks for checking out my blog!
